(Page created with "{{Tuto Details |Description=<translate>Remember the opening scene of movie 'Project Almanac'? Controlling a drone with hand? Make it yourself, and to simplify, let's contr...") |

|||

| Line 16: | Line 16: | ||

}} | }} | ||

{{Materials}} | {{Materials}} | ||

| + | {{Tuto Step | ||

| + | |Step_Title=<translate>Introduction</translate> | ||

| + | |Step_Content=<translate>Have you watched the movie 'Project Almanac', released in 2015? If not, let me briefly describe a scene from it. | ||

| + | |||

| + | |||

| + | In the movie, the main character wants to get into MIT and therefore builds a project for his portfolio. The project is a drone that can be controlled with a 2.4GHz remote controller, but when the software application on the laptop is running, the main character is seen controlling the drone with his hands in the air! The application uses a webcam to track the character's hand movements.</translate> | ||

| + | |Step_Picture_00=Visual_Gesture_Controlled_IoT_Car_1_-_Made_with_Clipchamp.gif | ||

| + | }} | ||

{{Tuto Step | {{Tuto Step | ||

|Step_Title=<translate>Custom PCB on your Way!</translate> | |Step_Title=<translate>Custom PCB on your Way!</translate> | ||

|Step_Content=<translate>Modern methods of development got easier with software services. For hardware services, we have limited options. Hence [http://www.pcbway.com/?from=akshayansinha PCBWay] gives the opportunity to get custom PCB manufactured for hobby projects as well as sample pieces, in very little delivery time | |Step_Content=<translate>Modern methods of development got easier with software services. For hardware services, we have limited options. Hence [http://www.pcbway.com/?from=akshayansinha PCBWay] gives the opportunity to get custom PCB manufactured for hobby projects as well as sample pieces, in very little delivery time | ||

| − | Get a discount on the first order of 10 PCB Boards. Now, [http://www.pcbway.com/?from=akshayansinha PCBWay] also offers end-to-end options for our products including hardware enclosures. So, if you design PCBs, get them printed in a few steps! | + | |

| − | |Step_Picture_00= | + | Get a discount on the first order of 10 PCB Boards. Now, [http://www.pcbway.com/?from=akshayansinha PCBWay] also offers end-to-end options for our products including hardware enclosures. So, if you design PCBs, get them printed in a few steps!</translate> |

| + | |Step_Picture_00=Visual_Gesture_Controlled_IoT_Car_ad_loop.gif | ||

| + | }} | ||

| + | {{Tuto Step | ||

| + | |Step_Title=<translate>Getting Started</translate> | ||

| + | |Step_Content=<translate>As we saw, the movie scene shows this technology off well. The best part is that in 2023 it is easy to rebuild with tools like OpenCV and MediaPipe. We will also control a machine, but with a slightly different method from the one the character uses to let the camera track his fingers. | ||

| + | |||

| + | |||

| + | He used color blob stickers on his fingertips so that the camera could detect those blobs. When there was a movement in the hands, which was visible from the camera, the laptop sent the signal to the drone to move accordingly. This allowed him to control the drone without any physical console. | ||

| + | |||

| + | |||

| + | Using more recent tools, we shall make a similar but much simpler version that can run on an embedded Linux system, which also makes it portable to an Android system. Using OpenCV and MediaPipe, let us see how we can control our two-wheeled battery-operated car over a Wi-Fi network with our hands in the air!</translate> | ||

| + | |Step_Picture_00=Visual_Gesture_Controlled_IoT_Car_2_-_Made_with_Clipchamp.gif | ||

| + | }} | ||

| + | {{Tuto Step | ||

| + | |Step_Title=<translate>OpenCV and MediaPipe</translate> | ||

| + | |Step_Content=<translate>OpenCV is an open-source computer vision library primarily designed for image and video analysis. It provides a rich set of tools and functions that enable computers to process and understand visual data. Here are some technical aspects. | ||

| + | |||

| + | * Image Processing: OpenCV offers a wide range of functions for image processing tasks such as filtering, enhancing, and manipulating images. It can perform operations like blurring, sharpening, and edge detection. | ||

| + | * Object Detection: OpenCV includes pre-trained models for object detection, allowing it to identify and locate objects within images or video streams. Techniques like Haar cascades and deep learning-based models are available. | ||

| + | * Feature Extraction: It can extract features from images, such as keypoints and descriptors, which are useful for tasks like image matching and recognition. | ||

| + | * Video Analysis: OpenCV enables video analysis, including motion tracking, background subtraction, and optical flow. | ||

| + | |||

| + | MediaPipe is an open-source framework developed by Google that provides tools and building blocks for building various types of real-time multimedia applications, particularly those related to computer vision and machine learning. It's designed to make it easier for developers to create applications that can process and understand video and camera inputs. Here's a breakdown of what MediaPipe does: | ||

| + | |||

| + | * Real-Time Processing: MediaPipe specializes in processing video and camera feeds in real-time. It's capable of handling live video streams from sources like webcams and mobile cameras. | ||

| + | * Cross-Platform: MediaPipe is designed to work across different platforms, including desktop, mobile, and embedded devices. This makes it versatile and suitable for a wide range of applications. | ||

| + | * Machine Learning Integration: MediaPipe seamlessly integrates with machine learning models, including TensorFlow Lite, which allows developers to incorporate deep learning capabilities into their applications. For example, you can use it to build applications that recognize gestures, detect facial expressions, or estimate the body's pose. | ||

| + | * Efficient and Optimized: MediaPipe is optimized for performance, making it suitable for real-time applications on resource-constrained devices. It takes advantage of hardware acceleration, such as GPU processing, to ensure smooth and efficient video processing. | ||

| + | |||

| + | As you may have noticed above, this project needs one feature from each of these tools: video analysis from OpenCV and hand tracking from MediaPipe. Let us begin by setting up the environment.</translate> | ||

| + | |Step_Picture_00=Visual_Gesture_Controlled_IoT_Car_ProjectAlmanac_Car.png | ||

| + | }} | ||

| + | {{Tuto Step | ||

| + | |Step_Title=<translate>Hand Tracking and Camera Frame UI</translate> | ||

| + | |Step_Content=<translate>As we move ahead, we need to know how to use OpenCV and MediaPipe to detect hands. For this part, we shall use their Python libraries. | ||

| + | |||

| + | Make sure you have Python installed on the laptop, then run the command below to install the necessary libraries -<syntaxhighlight lang="bash"> | ||

| + | python -m pip install opencv-python mediapipe requests numpy | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | To begin controlling the car from the camera, let us understand how it will function - | ||

| + | |||

| + | * The camera must track the hands or fingers to control the movement of the car. We shall track the index finger on the camera for that. | ||

| + | * Based on the location of the finger relative to the frame, the robot will move forward, backward, left or right, or stop. | ||

| + | * While the movements are tracked in real time, the interface program should send data asynchronously so that reading frames is not blocked. | ||

| + | |||

| + | To keep the above tasks simple, the methods used in the program have been kept at a beginner's level. Below is the final version! | ||

| + | |||

| + | As we see above, the interface is very simple and easy to use. Just move your index finger tip around, and use the frame as a console to control the robot. Read till the end and build along to watch it in action!</translate> | ||

| + | |Step_Picture_00=Visual_Gesture_Controlled_IoT_Car_1.jpg | ||

| + | }} | ||

| + | {{Tuto Step | ||

| + | |Step_Title=<translate>Code - Software</translate> | ||

| + | |Step_Content=<translate>Now that we know what the software UI looks like, let us walk through the code behind it and use HTTP requests to signal the car to act accordingly. | ||

| + | |||

| + | '''Initializing MediaPipe Hands'''<syntaxhighlight lang="python3"> | ||

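| + | import cv2 | ||

| + | import mediapipe as mp | ||

| + | |||

| + | mp_hands = mp.solutions.hands  # hand-tracking solution module | ||

| + | hands = mp_hands.Hands()  # detector with default settings | ||

| + | mp_drawing = mp.solutions.drawing_utils  # helpers for drawing landmarks | ||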

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | Here, we initialize the MediaPipe Hands module for hand tracking. mp_hands and mp_drawing refer to the mp.solutions.hands and mp.solutions.drawing_utils modules, which provide hand detection and visualization, and hands is the detector instance that the frames are passed to. | ||

| + | |||

| + | '''Initializing Variables'''<syntaxhighlight lang="python3"> | ||

| + | tracking = False | ||

| + | hand_y = 0 | ||

| + | hand_x = 0 | ||

| + | prev_dir = "" | ||

| + | URL = "http://projectalmanac.local/" | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | In this step, we initialize several variables that will be used to keep track of hand-related information and the previous direction. | ||

| + | |||

| + | A URL is defined to send HTTP requests to the hardware code of the car. | ||

| + | |||

| + | '''Defining a Function to Send HTTP Requests'''<syntaxhighlight lang="python3"> | ||

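| + | import requests | ||

| + | import threading  # used in the main loop to call send() in the background | ||

| + | |||

| + | def send(link): | ||

| + |     try: | ||

| + |         response = requests.get(link) | ||

| + |         print("Response ->", response) | ||

| + |     except Exception as e: | ||

| + |         print(f"Error sending HTTP request: {e}") | ||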

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | This step defines a function named send that takes a link as an argument and sends an HTTP GET request to the specified URL. It prints the response or an error message if the request fails. | ||

| + | |||

| + | |||

| + | These are the initial setup steps. The following steps are part of the main loop where video frames are processed for hand tracking and gesture recognition. I'll explain these steps one by one: | ||

| + | |||

| + | '''MediaPipe Hands Processing'''<syntaxhighlight lang="python3"> | ||

| + | ret, frame = cap.read() | ||

| + | frame = cv2.flip(frame, 1) | ||

| + | rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) | ||

| + | results = hands.process(rgb_frame) | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | Inside the loop, it captures a frame from the camera (cap.read()) and flips it horizontally (cv2.flip) to mirror the image. | ||

| + | |||

| + | The code converts the captured frame to RGB format (cv2.cvtColor) and then uses the MediaPipe Hands module to process the frame (hands.process) for hand landmark detection. The results are stored in the results variable. | ||

| + | |||

| + | '''Hand Landmarks and Tracking'''<syntaxhighlight lang="python3"> | ||

| + | if results.multi_hand_landmarks: | ||

| + |     hand_landmarks = results.multi_hand_landmarks[0] | ||

| + |     index_finger_tip = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP] | ||

| + |     hand_y = int(index_finger_tip.y * height) | ||

| + |     hand_x = int(index_finger_tip.x * width) | ||

| + |     tracking = True | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | This section checks if hand landmarks are detected in the frame (results.multi_hand_landmarks). If so, it uses only the first detected hand and reads the normalized x- and y-coordinates of the index finger tip. It scales them by the frame width and height to update hand_x and hand_y in pixels, and sets tracking to True. | ||

| + | |||

| + | '''Direction Calculation'''<syntaxhighlight lang="python3"> | ||

| + | frame_center = (width // 2, height // 2) | ||

| + | if tracking: | ||

| + |     direction = find_direction(frame, hand_y, hand_x, frame_center) | ||

| + |     if direction != prev_dir: | ||

| + |         try: | ||

| + |             link = URL + direction | ||

| + |             http_thread = threading.Thread(target=send, args=(link,)) | ||

| + |             http_thread.start() | ||

| + |         except Exception as e: | ||

| + |             print(e) | ||

| + |         prev_dir = direction | ||

| + |         print(direction) | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | In this step, the code calculates the center of the frame and, if tracking is active, it uses the find_direction function to calculate the direction based on the hand's position. The direction is stored in the direction variable. | ||

| + | |||

| + | We keep both the current and the previous direction. Comparing them acts as a simple guard, so that only one HTTP request is sent for each change in command. The direction is appended to the base URL to form the link for the HTTP request. | ||

| + | |||

| + | '''Visualization'''<syntaxhighlight lang="python3"> | ||

| + | opacity = 0.8 | ||

| + | cv2.addWeighted(black_background, opacity, frame, 1 - opacity, 0, frame) | ||

| + | cv2.imshow("Project Almanac", frame) | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |||

| + | If tracking is active, this section of the code adds visual elements to the frame, including a filled circle representing the index finger tip's position and text indicating the detected direction. | ||

| + | |||

| + | |||

| + | The code blends a black background with the original frame to create an overlay with adjusted opacity. The resulting frame is displayed in a window named "Project Almanac".</translate> | ||

| + | }} | ||

| + | {{Tuto Step | ||

| + | |Step_Title=<translate></translate> | ||

| + | |Step_Content=<translate></translate> | ||

}} | }} | ||

{{Notes | {{Notes | ||

| Line 27: | Line 189: | ||

}} | }} | ||

{{PageLang | {{PageLang | ||

| + | |Language=en | ||

|SourceLanguage=none | |SourceLanguage=none | ||

|IsTranslation=0 | |IsTranslation=0 | ||

| − | |||

}} | }} | ||

{{Tuto Status | {{Tuto Status | ||

|Complete=Draft | |Complete=Draft | ||

}} | }} | ||

Revision as of 04:26, 26 September 2023

Introduction

Have you watched the movie 'Project Almanac', released in 2015? If not, let me briefly describe a scene from it.

In the movie, the main character wants to get into MIT and therefore builds a project for his portfolio. The project is a drone that can be controlled with a 2.4GHz remote controller, but when the software application on the laptop is running, the main character is seen controlling the drone with his hands in the air! The application uses a webcam to track the character's hand movements.

Materials

Tools

Step 1 - Introduction

Have you watched the movie 'Project Almanac', released in 2015? If not, let me briefly describe a scene from it.

In the movie, the main character wants to get into MIT and therefore builds a project for his portfolio. The project is a drone that can be controlled with a 2.4GHz remote controller, but when the software application on the laptop is running, the main character is seen controlling the drone with his hands in the air! The application uses a webcam to track the character's hand movements.

Step 2 - Custom PCB on your Way!

Software services have made modern development easier, but the options for hardware services are limited. PCBWay gives the opportunity to get custom PCBs manufactured for hobby projects as well as sample pieces, with very short delivery times.

Get a discount on the first order of 10 PCB boards. PCBWay now also offers end-to-end options for our products, including hardware enclosures. So, if you design PCBs, get them printed in a few steps!

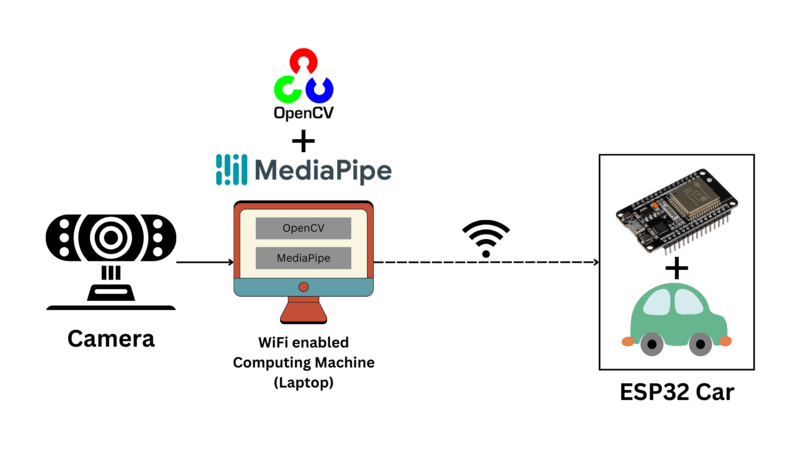

Step 3 - Getting Started

As we saw, the movie scene shows this technology off well. The best part is that in 2023 it is easy to rebuild with tools like OpenCV and MediaPipe. We will also control a machine, but with a slightly different method from the one the character uses to let the camera track his fingers.

He used colored blob stickers on his fingertips so that the camera could detect those blobs. When the camera saw his hands move, the laptop sent a signal to the drone to move accordingly. This allowed him to control the drone without any physical console.

Using more recent tools, we shall make a similar but much simpler version that can run on an embedded Linux system, which also makes it portable to an Android system. Using OpenCV and MediaPipe, let us see how we can control our two-wheeled battery-operated car over a Wi-Fi network with our hands in the air!

Step 4 - OpenCV and MediaPipe

OpenCV is an open-source computer vision library primarily designed for image and video analysis. It provides a rich set of tools and functions that enable computers to process and understand visual data. Here are some technical aspects.

- Image Processing: OpenCV offers a wide range of functions for image processing tasks such as filtering, enhancing, and manipulating images. It can perform operations like blurring, sharpening, and edge detection.

- Object Detection: OpenCV includes pre-trained models for object detection, allowing it to identify and locate objects within images or video streams. Techniques like Haar cascades and deep learning-based models are available.

- Feature Extraction: It can extract features from images, such as keypoints and descriptors, which are useful for tasks like image matching and recognition.

- Video Analysis: OpenCV enables video analysis, including motion tracking, background subtraction, and optical flow.

MediaPipe is an open-source framework developed by Google that provides tools and building blocks for building various types of real-time multimedia applications, particularly those related to computer vision and machine learning. It's designed to make it easier for developers to create applications that can process and understand video and camera inputs. Here's a breakdown of what MediaPipe does:

- Real-Time Processing: MediaPipe specializes in processing video and camera feeds in real-time. It's capable of handling live video streams from sources like webcams and mobile cameras.

- Cross-Platform: MediaPipe is designed to work across different platforms, including desktop, mobile, and embedded devices. This makes it versatile and suitable for a wide range of applications.

- Machine Learning Integration: MediaPipe seamlessly integrates with machine learning models, including TensorFlow Lite, which allows developers to incorporate deep learning capabilities into their applications. For example, you can use it to build applications that recognize gestures, detect facial expressions, or estimate the body's pose.

- Efficient and Optimized: MediaPipe is optimized for performance, making it suitable for real-time applications on resource-constrained devices. It takes advantage of hardware acceleration, such as GPU processing, to ensure smooth and efficient video processing.

As you may have noticed above, this project needs one feature from each of these tools: video analysis from OpenCV and hand tracking from MediaPipe. Let us begin by setting up the environment.

Step 5 - Hand Tracking and Camera Frame UI

As we move ahead, we need to know how to use OpenCV and MediaPipe to detect hands. For this part, we shall use their Python libraries.

Make sure you have Python installed on the laptop, then run the command below to install the necessary libraries -

    python -m pip install opencv-python mediapipe requests numpy

To begin controlling the car from the camera, let us understand how it will function -

- The camera must track the hands or fingers to control the movement of the car. We shall track the index finger on the camera for that.

- Based on the location of the finger relative to the frame, the robot will move forward, backward, left or right, or stop.

- While the movements are tracked in real time, the interface program should send data asynchronously so that reading frames is not blocked.

To keep the above tasks simple, the methods used in the program have been kept at a beginner's level. Below is the final version!

As we see above, the interface is very simple and easy to use. Just move your index finger tip around, and use the frame as a console to control the robot. Read till the end and build along to watch it in action!

Step 6 - Code - Software

Now that we know what the software UI looks like, let us walk through the code behind it and use HTTP requests to signal the car to act accordingly.

Initializing MediaPipe Hands

    import cv2
    import mediapipe as mp

    mp_hands = mp.solutions.hands  # hand-tracking solution module
    hands = mp_hands.Hands()  # detector with default settings
    mp_drawing = mp.solutions.drawing_utils  # helpers for drawing landmarks

Here, we initialize the MediaPipe Hands module for hand tracking. mp_hands and mp_drawing refer to the mp.solutions.hands and mp.solutions.drawing_utils modules, which provide hand detection and visualization, and hands is the detector instance that the frames are passed to.

Initializing Variables

    tracking = False  # set to True once a fingertip has been found
    hand_y = 0        # fingertip position in pixels
    hand_x = 0
    prev_dir = ""     # last direction sent to the car
    URL = "http://projectalmanac.local/"

In this step, we initialize several variables that will be used to keep track of hand-related information and the previous direction.

A URL is defined to send HTTP requests to the hardware code of the car.

Defining a Function to Send HTTP Requests

    import requests
    import threading  # used in the main loop to call send() in the background

    def send(link):
        try:
            response = requests.get(link)
            print("Response ->", response)
        except Exception as e:
            print(f"Error sending HTTP request: {e}")

This step defines a function named send that takes a link as an argument and sends an HTTP GET request to the specified URL. It prints the response or an error message if the request fails.
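One thing the function above leaves open is what happens when the car is unreachable: a GET request without a timeout can keep its thread waiting for a long time. The sketch below is one way to bound that wait. It swaps in the standard library's urllib for requests so that it has no third-party dependency; the name send_command, the 1-second default timeout and the fetch parameter are assumptions made for illustration and are not part of the original program.

```python
import urllib.error
import urllib.request


def send_command(url, timeout=1.0, fetch=urllib.request.urlopen):
    """Send a GET request and return the HTTP status, or None on failure.

    `fetch` defaults to urllib.request.urlopen; it is a parameter only so
    the function can be exercised without a real car on the network.
    """
    try:
        # The timeout bounds how long an unreachable car can stall this call.
        with fetch(url, timeout=timeout) as response:
            return response.status
    except (urllib.error.URLError, OSError) as exc:
        print(f"Error sending HTTP request: {exc}")
        return None
```

With requests, the comparable change would be passing a timeout argument to requests.get.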

These are the initial setup steps. The following steps are part of the main loop where video frames are processed for hand tracking and gesture recognition. I'll explain these steps one by one:

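MediaPipe Hands Processing

    ret, frame = cap.read()             # grab one frame from the camera
    frame = cv2.flip(frame, 1)          # mirror the image horizontally
    rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV delivers BGR, MediaPipe expects RGB
    results = hands.process(rgb_frame)  # run hand landmark detection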

Inside the loop, it captures a frame from the camera (cap.read()) and flips it horizontally (cv2.flip) to mirror the image.

The code converts the captured frame to RGB format (cv2.cvtColor) and then uses the MediaPipe Hands module to process the frame (hands.process) for hand landmark detection. The results are stored in the results variable.

Hand Landmarks and Tracking

    if results.multi_hand_landmarks:
        hand_landmarks = results.multi_hand_landmarks[0]
        index_finger_tip = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_TIP]
        hand_y = int(index_finger_tip.y * height)
        hand_x = int(index_finger_tip.x * width)
        tracking = True

This section checks if hand landmarks are detected in the frame (results.multi_hand_landmarks). If so, it uses only the first detected hand and reads the normalized x- and y-coordinates of the index finger tip. It scales them by the frame width and height to update hand_x and hand_y in pixels, and sets tracking to True.
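MediaPipe reports each landmark's x and y as fractions of the frame's width and height, and in practice a fingertip near the edge can come back slightly outside the 0 to 1 range. A minimal sketch of the conversion to pixel coordinates, with clamping added as an extra safeguard; to_pixel is a hypothetical helper, not a function from the original program or from MediaPipe:

```python
def to_pixel(norm_x, norm_y, width, height):
    """Convert normalized landmark coordinates to clamped pixel coordinates."""
    x = int(norm_x * width)
    y = int(norm_y * height)
    # Clamp so the point always lies inside the frame.
    x = max(0, min(width - 1, x))
    y = max(0, min(height - 1, y))
    return x, y


print(to_pixel(0.5, 0.25, 640, 480))  # (320, 120)
print(to_pixel(1.2, -0.1, 640, 480))  # (639, 0)
```

Here width and height stand for the size of the camera frame in pixels; 640 by 480 is only an example value.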

Direction Calculation

    frame_center = (width // 2, height // 2)
    if tracking:
        direction = find_direction(frame, hand_y, hand_x, frame_center)
        if direction != prev_dir:
            try:
                link = URL + direction
                http_thread = threading.Thread(target=send, args=(link,))
                http_thread.start()
            except Exception as e:
                print(e)
            prev_dir = direction
            print(direction)

In this step, the code calculates the center of the frame and, if tracking is active, it uses the find_direction function to calculate the direction based on the hand's position. The direction is stored in the direction variable.
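The find_direction function itself is not shown in this step. As a rough sketch of what such a mapping could look like, the function below compares the fingertip with the frame center and treats a small box around the center as "stop". The name classify_direction, the dead_zone size and the direction strings are assumptions for illustration; the original program's rules and the paths the car accepts may differ.

```python
def classify_direction(hand_x, hand_y, frame_center, dead_zone=60):
    """Map a fingertip position to one of five hypothetical commands."""
    center_x, center_y = frame_center
    dx = hand_x - center_x
    dy = hand_y - center_y  # image y grows downward

    # Inside the central box: no movement.
    if abs(dx) <= dead_zone and abs(dy) <= dead_zone:
        return "stop"
    # Otherwise the larger offset decides the axis.
    if abs(dy) >= abs(dx):
        return "forward" if dy < 0 else "backward"
    return "right" if dx > 0 else "left"


center = (320, 240)
print(classify_direction(320, 100, center))  # forward
print(classify_direction(500, 250, center))  # right
print(classify_direction(330, 245, center))  # stop
```

The central dead zone is a design choice: without it, small jitters of the fingertip around the center would flip the command back and forth.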

We keep both the current and the previous direction. Comparing them acts as a simple guard, so that only one HTTP request is sent for each change in command. The direction is appended to the base URL to form the link for the HTTP request.
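The idea of sending only when the command changes can be isolated into a small helper. This is a sketch under assumptions: DirectionNotifier and its method names are invented for illustration, the direction strings are examples, and the send function is passed in so the example runs without a car on the network.

```python
import threading


class DirectionNotifier:
    """Call `send` in a background thread, but only when the direction changes."""

    def __init__(self, base_url, send):
        self.base_url = base_url
        self.send = send
        self.prev_dir = ""

    def update(self, direction):
        """Return the started thread, or None if nothing was sent."""
        if direction == self.prev_dir:
            return None
        self.prev_dir = direction
        thread = threading.Thread(
            target=self.send, args=(self.base_url + direction,), daemon=True
        )
        thread.start()
        return thread


sent = []
notifier = DirectionNotifier("http://projectalmanac.local/", sent.append)
for d in ["forward", "forward", "left", "left", "stop"]:
    t = notifier.update(d)
    if t is not None:
        t.join()  # joined here only to make the printed order deterministic
print(sent)
```

Five updates produce three requests, one per change. In a real camera loop the thread would not be joined, so that a slow request never holds up the next frame.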

Visualization

    opacity = 0.8
    cv2.addWeighted(black_background, opacity, frame, 1 - opacity, 0, frame)
    cv2.imshow("Project Almanac", frame)

If tracking is active, this section of the code adds visual elements to the frame, including a filled circle representing the index finger tip's position and text indicating the detected direction.

The code blends a black background with the original frame to create an overlay with adjusted opacity. The resulting frame is displayed in a window named "Project Almanac".
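To see what the blend does to a single pixel, recall that cv2.addWeighted computes src1 * alpha + src2 * beta + gamma per channel and saturates the result to the image's value range. A plain-Python sketch of that arithmetic for 8-bit values (blend_channel is a hypothetical helper, used here only to illustrate the numbers):

```python
def blend_channel(src1, src2, alpha, beta, gamma=0.0):
    """Mimic the per-channel arithmetic of cv2.addWeighted for 8-bit values."""
    value = src1 * alpha + src2 * beta + gamma
    # Round, then clamp to the 0..255 range of an 8-bit image.
    return max(0, min(255, int(round(value))))


opacity = 0.8
# Black background (0) at 80% and a frame pixel of 200 at the remaining 20%.
print(blend_channel(0, 200, opacity, 1 - opacity))  # 40
```

With opacity at 0.8, the camera image is dimmed to about a fifth of its brightness wherever the background is black.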

Step 7 -

Draft
